Quality Management in Higher Education

Is Student Feedback the Cornerstone of Quality Assurance?

Luís Carvalho

Introduction

Although societies have not reached agreement on the forces that should shape what, how and why students learn in universities, universities have a core and indisputable mission of providing formal post-secondary education (Collini, 2012). Therefore, if students enrol in higher education institutions (HEIs) to acquire a set of knowledge, skills and competences, HEIs have a consequent obligation, which is also their mission, to ensure that students undergo a process of change during their studies that results in educational gain. Arum and Roksa (2011, p. 204) recently published an extensive study in the USA claiming that undergraduate students make little progress in “critical thinking, complex reasoning, and written communication” over the course of their studies, raising concerns and doubts about the effectiveness of HEIs as educational institutions.

In Europe, recent trends in the organization of HEIs show increasing attention to Quality Assurance (QA), and HEIs are asked to develop strategies and procedures to assure and enhance the quality of their activities (Amaral, 2012). To this end, HEIs have implemented internal quality systems to improve, among other things, teaching quality. They are also expected to incorporate the voice of students and other stakeholders in the quality process (ENQA, 2009). To evaluate teaching, and by extension students’ learning, QA units have developed methods to collect feedback on students’ educational experiences, namely their “perceptions about the learning and teaching” (Harvey, 2001).

Although Student Feedback (SF) has proved to be a valuable tool for improving teaching (Kane et al., 2008), it has limitations; for example, it does not provide direct information on learning (Brennan & Williams, 2004). These limitations call for additional approaches to evaluating and enhancing the quality of the educational experience, namely a systematic approach to assessing learning that monitors and supports students’ progress.

Hence, in this paper I argue that:

1 – Student Feedback needs to be supplemented with a well-defined system for assessing students’ learning in order to improve the internal quality of teaching.

2 – At the institution level, Quality Assurance procedures should define policies for assessing students’ learning and support teachers in adopting effective practices for assessing learning.

In this essay, I summarize relevant issues described in the literature on Student Feedback and Student Assessment (SA) to support the claim that QA units need to focus on both, with comparable levels of investment.

Student Feedback: listening to the voice of students.

Student Feedback comprises the information collected to characterize students’ views and perceptions of the educational experience. As a data collection tool, SF serves two purposes in HEIs: to guide the internal improvement of teaching and learning, and to generate information that is easily accessible to external stakeholders (Harvey, 2001).

The high number of students enrolling in HEIs calls for instruments that are practical and easy to administer. Harvey (2001) describes an array of surveys used to collect students’ feedback, ranging from institution-wide surveys to more focused module-level surveys. Consequently, SF surveys collect a relatively large amount of information that would otherwise be difficult to gather at a single point in time: “perceptions about the learning and teaching, the learning support facilities (such as, libraries, computing facilities), the learning environment, (lecture rooms, laboratories, social space and university buildings), support facilities (refectories, student accommodation, health facilities, student services) and external aspects of being a student (such as finance, transport infrastructure)” (Harvey, 2001, p. 2).

The increasing attention to student-centred approaches reinforces the importance of students’ voice in the quality assurance process. Because students occupy a pivotal position in the learning experience, as both active participants and observers, their views should carry considerable weight in decision-making about teaching procedures and strategies (Brennan & Williams, 2004). Richardson (2005) reviewed a series of empirical studies in North America and found evidence that students’ ratings of teachers’ performance were reliable (i.e. high test-retest reliability and high inter-rater reliability). In fact, the studies showed that students were consistent when rating a specific teacher, even when they were taught by that teacher in different courses. Additionally, students’ perceptions remained stable over time.

Although the use of Student Feedback is widespread in HEIs (Richardson, 2005), the feedback gathered from students is often not used to foster improvement (Harvey, 2001). Moreover, this failure to act on the data, and to inform students of the impact of their views, can make the exercise seem a waste of time and weaken students’ willingness to participate in the process (Harvey, 2001; Roxå & Mårtensson, 2010; Williams, 2010).

Two further issues limit the value of SF. First, SF provides only indirect data on learning: students reflect on and express their views about the learning experience (self-perception of learning). Thus, SF does not assess actual learning, nor can perceived learning be assumed to correlate reliably with actual learning (Brennan & Williams, 2004). Second, because SF is usually collected at the end of the learning experience (typically at the end of the semester), it provides no direct benefit to the students taking a given module, even though it can be used to improve the teaching of that module in the future. Moreover, the timing of the collection can negatively influence students’ responses or even decrease the response rate (Johnson, 2003).

Given the limitations of SF in assessing the educational experience that students undergo, a comprehensive system for assessing students’ learning should supplement SF in order to assure the quality of learning.

Student Assessment: looking into the minds of students.

In universities, teachers use a diverse set of instruments to assess their students’ learning, such as exams, essays, group presentations and discussions. When discussing Student Assessment, Knight (2001) warns against a common misconception: using the terms assessment and measurement interchangeably. Only measurement is an exact process, which means that assessment, by definition, has limited precision.

Nonetheless, Pintrich (2002) explains that assessment gives learners an opportunity to reflect on their own learning (metacognition). This dimension of knowledge, thinking about one’s own thinking, is a valuable asset to consider when designing assessment instruments, because students who are aware of their own thinking and learning strategies learn better. The form of an assessment also shapes how students approach their study: a multiple-choice test, for example, will lead them to focus more on memorization than on understanding. Assessment planning therefore influences what students learn, because it helps them guide their learning and reflect on their own strengths and weaknesses. Finally, when assessment methods are not aligned with learning objectives and teaching strategies, assessment risks becoming a counter-productive and time-consuming exercise that hinders learning (Pintrich, 2002).

Moreover, unlike SF, assessment of students’ learning is a direct approach to collecting data on learning achievement. It can therefore show how learners are progressing towards the intended learning objectives. SA also produces useful internal information for improving the effectiveness of teaching (ENQA, 2009). The risk of not having, at the institution level, an effective plan to assess what students learn during their studies is illustrated by recent empirical research by Arum and Roksa (2011), who conducted an extensive study of 3,000 undergraduate students from 29 HEIs to evaluate how much students learn in higher education. The authors report that after two and four years of study, 45% and 36% of participating students, respectively, showed no significant improvement in learning. In their study, learning was assessed by analysing the progression of participants’ critical thinking, complex reasoning and writing skills.

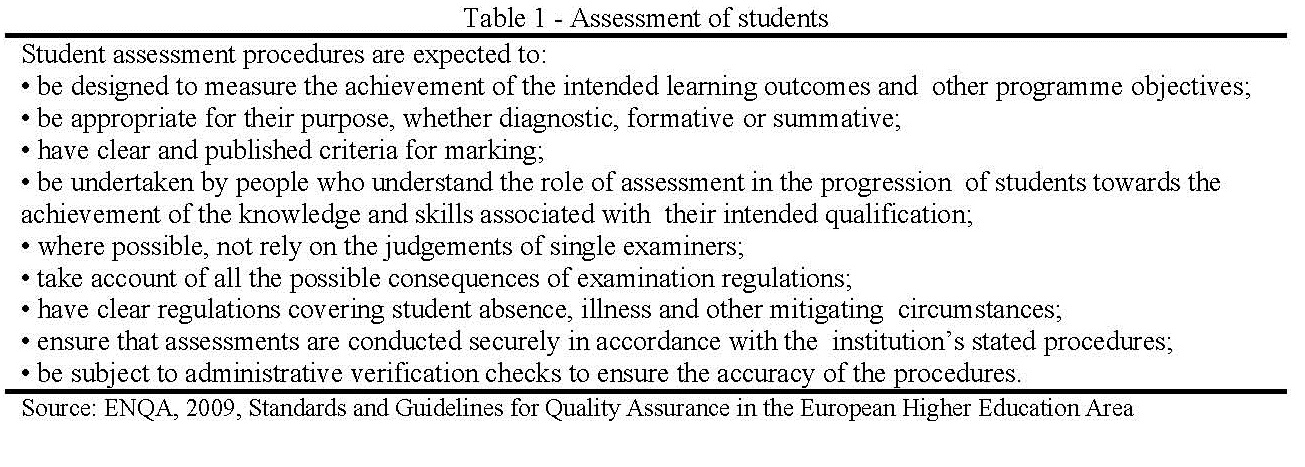

Despite the advantages of SA, there are still challenging limitations to consider. In a comprehensive description of the limitations of summative assessment, Knight (2002, p. 2) argues that such assessments “are unreliable and routinely mismanipulated, incomplete and generally uninformative representations of student achievements”. He further explains that complex learning outcomes (e.g. problem-solving skills) demand complex assessment systems, and that the more complex an assessment method is, the more uncertain its accuracy becomes (complexity theory; Thrift, 1999, as cited in Knight, 2002), since assessment is by its nature never exact. The challenge, then, is to make faculty aware of this constraint, and QA needs to play a role in reducing it by defining clear policies and guidance. Table 1 includes all the guidelines on student assessment published by ENQA (2009) in its overarching report, Standards and Guidelines for Quality Assurance in the European Higher Education Area. The ENQA document’s coverage of student assessment appears rather brief.

Conclusion

In this essay I have argued that accurately characterizing the learning experience requires a balance between student feedback and student assessment. Because both methodologies have limitations (Knight, 2002; Johnson, 2003), quality assurance units need to define and implement binding policies under which students’ views about learning are acted upon to change instruction. Learners’ perspectives should also be supplemented with direct assessment of students’ learning. Here, too, QA units need to provide guidelines and training in order to implement, at the institution level, rigorous assessment methods that provide indicators of the effectiveness of teaching and also help students monitor their own progress.

Student Feedback generally fails to drive improvement in HEIs because no action is taken on the collected data (Harvey, 2001). Moreover, SF processes follow methodologies that prevent the learners who provide the information from benefiting directly. First, information is usually collected at the end of the semester, when the learning process has already ended; second, students receive no information on what happened to their feedback, i.e. no feedback on feedback.

To complement the diagnostic dimension of Student Feedback, Student Assessment can provide information on actual learning. Hence, it is important to improve the assessment of students’ performance, particularly at the formative level, to strengthen accountability in the learning process. Collecting assessment data to monitor learning can also provide a basis for improving instruction. In this sense, learning should be an institutional priority: the fact that learning outcomes cannot be measured exactly (Knight, 2002) does not mean that assessment should not take place to produce data for improving instruction (Arum & Roksa, 2011). Students’ performance needs to be assessed continuously, comparing starting and end points, monitoring change and fostering improvements in instruction and curriculum.

This paper acknowledges the methodological imperfections of assessment procedures (e.g. limited reliability) (Knight, 2002). While it does not provide a solution or alternatives, it is fair to affirm that, just as QA units have made sustained, long-standing efforts to improve SF (Kane et al., 2008), a parallel effort is needed for SA. This means investing more in training teachers to improve their knowledge of and skills in assessing students, supporting them with clear guidelines on the strengths and weaknesses of different assessment methods and with clear criteria (e.g. rubrics) for defining performance levels, and redefining policies to reduce subjectivity in assessment (e.g. by involving more assessors in the process).

The debate on the future of universities includes many controversial points, e.g. privatization, student fees, accountability to stakeholders and academic freedom (Collini, 2012). Nonetheless, I cannot see a future in which universities disregard their sine qua non nature as learning-centred institutions. Universities therefore need to make better use of their students’ voices, but they remain first and foremost accountable for ensuring that learning is taking place. To that end, quality assurance needs to build, as a cornerstone of its strategy, an effective action plan to foster a rigorous culture of Student Feedback and Student Assessment in universities.

References

Amaral, A., 2012. Recent Trends in Quality Assurance. In A3ES and CIPES Conference. Porto: A3ES, pp.1–19.

Arum, R. & Roksa, J., 2011. Limited Learning on College Campuses. Society, 48(3), pp.203–207. Available at: http://www.springerlink.com/index/10.1007/s12115-011-9417-8 [Accessed November 5, 2012].

Brennan, J. & Williams, R., 2004. Collecting and Using Student Feedback: A Guide to Good Practice. Learning and Teaching Support Network.

Collini, S., 2012. What Are Universities For? Penguin UK. Kindle edition.

ENQA, 2009. Standards and Guidelines for Quality Assurance in the European Higher Education Area. Helsinki: ENQA.

Harvey, L., 2001. Student Feedback: A Report to the Higher Education Funding Council for England. Birmingham: University of Central England, Centre for Research into Quality.

Johnson, T., 2003. Online student ratings: Will students respond? New Directions for Teaching and Learning, 96, pp.49–59.

Kane, D., Williams, J. & Cappuccini-Ansfield, G., 2008. Student Satisfaction Surveys: The Value in Taking an Historical Perspective. Quality in Higher Education, 14(2), pp.137–158.

Pintrich, P.R., 2002. The Role of Metacognitive Knowledge in Learning, Teaching, and Assessing. Theory into Practice, 41(4).

Richardson, J.T.E., 2005. Instruments for obtaining student feedback: a review of the literature. Assessment & Evaluation in Higher Education, 30(4), pp.387–415. Available at: http://www.tandfonline.com/doi/abs/10.1080/02602930500099193 [Accessed October 7, 2012].

Roxå, T. & Mårtensson, K., 2010. Improving university teaching through student feedback: a critical investigation. In C.S. Nair & P. Mertova, eds. Student Feedback: The Cornerstone to an Effective Quality Assurance System in Higher Education. Oxford: Chandos Publishing, ch. 4.